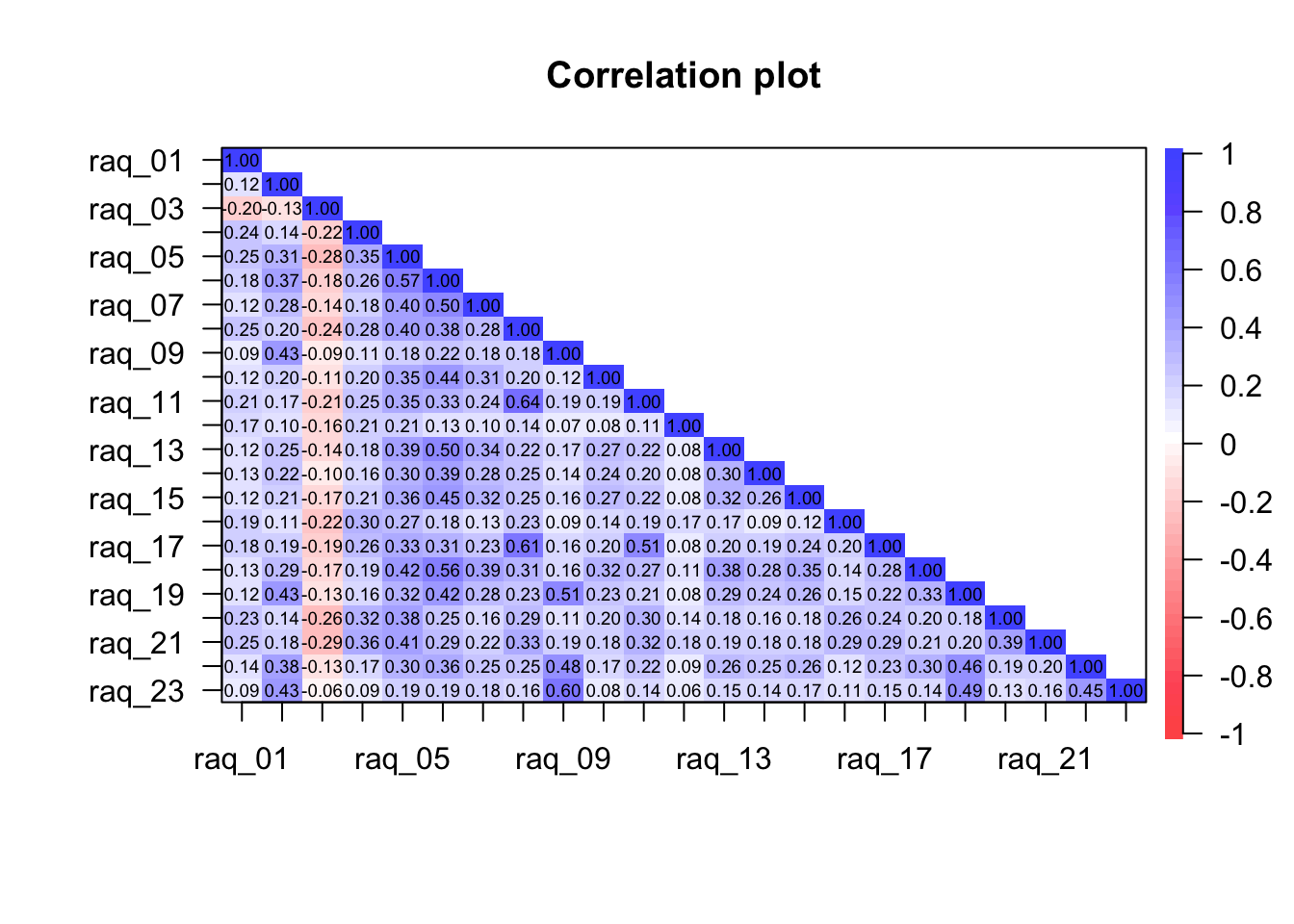

# Correlation Matrix (Pearson method)

Parameter1 | Parameter2 | r | 95% CI | t(2569) | p
--- | --- | --: | --- | --: | ---
raq_01 | raq_02 | 0.11 | [ 0.07, 0.15] | 5.47 | < .001***
raq_01 | raq_03 | -0.18 | [-0.22, -0.14] | -9.21 | < .001***
raq_01 | raq_04 | 0.22 | [ 0.18, 0.25] | 11.26 | < .001***
raq_01 | raq_05 | 0.22 | [ 0.19, 0.26] | 11.60 | < .001***
raq_01 | raq_06 | 0.16 | [ 0.12, 0.20] | 8.17 | < .001***
raq_01 | raq_07 | 0.11 | [ 0.07, 0.14] | 5.41 | < .001***
raq_01 | raq_08 | 0.22 | [ 0.18, 0.26] | 11.53 | < .001***
raq_01 | raq_09 | 0.08 | [ 0.05, 0.12] | 4.26 | < .001***
raq_01 | raq_10 | 0.11 | [ 0.07, 0.15] | 5.70 | < .001***
raq_01 | raq_11 | 0.19 | [ 0.15, 0.23] | 9.76 | < .001***
raq_01 | raq_12 | 0.15 | [ 0.11, 0.19] | 7.67 | < .001***
raq_01 | raq_13 | 0.11 | [ 0.07, 0.15] | 5.55 | < .001***
raq_01 | raq_14 | 0.12 | [ 0.08, 0.15] | 5.87 | < .001***
raq_01 | raq_15 | 0.11 | [ 0.07, 0.15] | 5.61 | < .001***
raq_01 | raq_16 | 0.18 | [ 0.14, 0.21] | 9.04 | < .001***
raq_01 | raq_17 | 0.17 | [ 0.13, 0.20] | 8.52 | < .001***
raq_01 | raq_18 | 0.12 | [ 0.08, 0.16] | 6.06 | < .001***
raq_01 | raq_19 | 0.11 | [ 0.07, 0.14] | 5.45 | < .001***
raq_01 | raq_20 | 0.21 | [ 0.17, 0.24] | 10.73 | < .001***
raq_01 | raq_21 | 0.23 | [ 0.19, 0.26] | 11.81 | < .001***
raq_01 | raq_22 | 0.12 | [ 0.09, 0.16] | 6.30 | < .001***
raq_01 | raq_23 | 0.08 | [ 0.04, 0.12] | 3.93 | < .001***
raq_02 | raq_03 | -0.12 | [-0.16, -0.08] | -6.14 | < .001***
raq_02 | raq_04 | 0.12 | [ 0.09, 0.16] | 6.31 | < .001***
raq_02 | raq_05 | 0.28 | [ 0.24, 0.31] | 14.71 | < .001***
raq_02 | raq_06 | 0.34 | [ 0.30, 0.37] | 18.19 | < .001***
raq_02 | raq_07 | 0.26 | [ 0.22, 0.29] | 13.44 | < .001***
raq_02 | raq_08 | 0.18 | [ 0.14, 0.22] | 9.16 | < .001***
raq_02 | raq_09 | 0.39 | [ 0.36, 0.42] | 21.50 | < .001***
raq_02 | raq_10 | 0.18 | [ 0.15, 0.22] | 9.48 | < .001***
raq_02 | raq_11 | 0.15 | [ 0.11, 0.19] | 7.82 | < .001***
raq_02 | raq_12 | 0.09 | [ 0.05, 0.13] | 4.58 | < .001***
raq_02 | raq_13 | 0.23 | [ 0.19, 0.27] | 11.96 | < .001***
raq_02 | raq_14 | 0.20 | [ 0.16, 0.23] | 10.21 | < .001***
raq_02 | raq_15 | 0.19 | [ 0.15, 0.22] | 9.64 | < .001***
raq_02 | raq_16 | 0.10 | [ 0.06, 0.13] | 4.92 | < .001***
raq_02 | raq_17 | 0.17 | [ 0.14, 0.21] | 8.94 | < .001***
raq_02 | raq_18 | 0.26 | [ 0.23, 0.30] | 13.87 | < .001***
raq_02 | raq_19 | 0.39 | [ 0.36, 0.42] | 21.50 | < .001***
raq_02 | raq_20 | 0.13 | [ 0.09, 0.17] | 6.55 | < .001***
raq_02 | raq_21 | 0.16 | [ 0.12, 0.20] | 8.26 | < .001***
raq_02 | raq_22 | 0.34 | [ 0.30, 0.37] | 18.28 | < .001***
raq_02 | raq_23 | 0.39 | [ 0.36, 0.42] | 21.56 | < .001***
raq_03 | raq_04 | -0.19 | [-0.23, -0.16] | -10.01 | < .001***
raq_03 | raq_05 | -0.25 | [-0.29, -0.22] | -13.34 | < .001***
raq_03 | raq_06 | -0.17 | [-0.20, -0.13] | -8.51 | < .001***
raq_03 | raq_07 | -0.13 | [-0.16, -0.09] | -6.45 | < .001***
raq_03 | raq_08 | -0.22 | [-0.25, -0.18] | -11.33 | < .001***
raq_03 | raq_09 | -0.08 | [-0.12, -0.04] | -4.14 | < .001***
raq_03 | raq_10 | -0.10 | [-0.14, -0.06] | -5.18 | < .001***
raq_03 | raq_11 | -0.19 | [-0.23, -0.15] | -9.81 | < .001***
raq_03 | raq_12 | -0.15 | [-0.19, -0.11] | -7.58 | < .001***
raq_03 | raq_13 | -0.13 | [-0.17, -0.09] | -6.71 | < .001***
raq_03 | raq_14 | -0.09 | [-0.13, -0.05] | -4.45 | < .001***
raq_03 | raq_15 | -0.15 | [-0.19, -0.11] | -7.57 | < .001***
raq_03 | raq_16 | -0.20 | [-0.24, -0.17] | -10.56 | < .001***
raq_03 | raq_17 | -0.18 | [-0.21, -0.14] | -9.01 | < .001***
raq_03 | raq_18 | -0.15 | [-0.19, -0.11] | -7.78 | < .001***
raq_03 | raq_19 | -0.12 | [-0.15, -0.08] | -5.96 | < .001***
raq_03 | raq_20 | -0.23 | [-0.27, -0.20] | -12.20 | < .001***
raq_03 | raq_21 | -0.26 | [-0.30, -0.22] | -13.62 | < .001***
raq_03 | raq_22 | -0.12 | [-0.16, -0.08] | -6.00 | < .001***
raq_03 | raq_23 | -0.05 | [-0.09, -0.01] | -2.65 | 0.014*
raq_04 | raq_05 | 0.32 | [ 0.28, 0.35] | 17.08 | < .001***
raq_04 | raq_06 | 0.23 | [ 0.20, 0.27] | 12.21 | < .001***
raq_04 | raq_07 | 0.17 | [ 0.13, 0.20] | 8.56 | < .001***
raq_04 | raq_08 | 0.25 | [ 0.21, 0.28] | 12.96 | < .001***
raq_04 | raq_09 | 0.10 | [ 0.06, 0.14] | 4.98 | < .001***
raq_04 | raq_10 | 0.18 | [ 0.14, 0.21] | 9.11 | < .001***
raq_04 | raq_11 | 0.22 | [ 0.18, 0.26] | 11.54 | < .001***
raq_04 | raq_12 | 0.20 | [ 0.16, 0.23] | 10.12 | < .001***
raq_04 | raq_13 | 0.16 | [ 0.13, 0.20] | 8.42 | < .001***
raq_04 | raq_14 | 0.15 | [ 0.11, 0.18] | 7.55 | < .001***
raq_04 | raq_15 | 0.18 | [ 0.15, 0.22] | 9.50 | < .001***
raq_04 | raq_16 | 0.27 | [ 0.23, 0.31] | 14.25 | < .001***
raq_04 | raq_17 | 0.24 | [ 0.20, 0.27] | 12.36 | < .001***
raq_04 | raq_18 | 0.17 | [ 0.13, 0.21] | 8.81 | < .001***
raq_04 | raq_19 | 0.14 | [ 0.10, 0.18] | 7.24 | < .001***
raq_04 | raq_20 | 0.28 | [ 0.25, 0.32] | 15.00 | < .001***
raq_04 | raq_21 | 0.32 | [ 0.28, 0.35] | 17.12 | < .001***
raq_04 | raq_22 | 0.16 | [ 0.12, 0.19] | 8.07 | < .001***
raq_04 | raq_23 | 0.08 | [ 0.04, 0.12] | 4.22 | < .001***
raq_05 | raq_06 | 0.51 | [ 0.48, 0.54] | 30.26 | < .001***
raq_05 | raq_07 | 0.36 | [ 0.33, 0.40] | 19.79 | < .001***
raq_05 | raq_08 | 0.36 | [ 0.32, 0.39] | 19.39 | < .001***
raq_05 | raq_09 | 0.16 | [ 0.12, 0.20] | 8.28 | < .001***
raq_05 | raq_10 | 0.31 | [ 0.28, 0.35] | 16.67 | < .001***
raq_05 | raq_11 | 0.31 | [ 0.28, 0.35] | 16.61 | < .001***
raq_05 | raq_12 | 0.19 | [ 0.15, 0.22] | 9.66 | < .001***
raq_05 | raq_13 | 0.35 | [ 0.32, 0.38] | 19.00 | < .001***
raq_05 | raq_14 | 0.27 | [ 0.23, 0.30] | 14.05 | < .001***
raq_05 | raq_15 | 0.33 | [ 0.29, 0.36] | 17.54 | < .001***
raq_05 | raq_16 | 0.24 | [ 0.20, 0.28] | 12.53 | < .001***
raq_05 | raq_17 | 0.30 | [ 0.27, 0.34] | 16.01 | < .001***
raq_05 | raq_18 | 0.38 | [ 0.35, 0.41] | 20.97 | < .001***
raq_05 | raq_19 | 0.29 | [ 0.26, 0.33] | 15.50 | < .001***
raq_05 | raq_20 | 0.34 | [ 0.31, 0.37] | 18.36 | < .001***
raq_05 | raq_21 | 0.37 | [ 0.34, 0.40] | 20.24 | < .001***
raq_05 | raq_22 | 0.27 | [ 0.23, 0.31] | 14.26 | < .001***
raq_05 | raq_23 | 0.17 | [ 0.13, 0.21] | 8.70 | < .001***
raq_06 | raq_07 | 0.45 | [ 0.42, 0.48] | 25.68 | < .001***
raq_06 | raq_08 | 0.34 | [ 0.31, 0.37] | 18.36 | < .001***
raq_06 | raq_09 | 0.20 | [ 0.16, 0.24] | 10.40 | < .001***
raq_06 | raq_10 | 0.40 | [ 0.36, 0.43] | 21.93 | < .001***
raq_06 | raq_11 | 0.29 | [ 0.26, 0.33] | 15.55 | < .001***
raq_06 | raq_12 | 0.11 | [ 0.07, 0.15] | 5.74 | < .001***
raq_06 | raq_13 | 0.46 | [ 0.42, 0.49] | 25.90 | < .001***
raq_06 | raq_14 | 0.36 | [ 0.32, 0.39] | 19.25 | < .001***
raq_06 | raq_15 | 0.40 | [ 0.37, 0.43] | 22.20 | < .001***
raq_06 | raq_16 | 0.16 | [ 0.12, 0.20] | 8.21 | < .001***
raq_06 | raq_17 | 0.28 | [ 0.24, 0.32] | 14.83 | < .001***
raq_06 | raq_18 | 0.51 | [ 0.48, 0.54] | 30.01 | < .001***
raq_06 | raq_19 | 0.38 | [ 0.34, 0.41] | 20.64 | < .001***
raq_06 | raq_20 | 0.23 | [ 0.19, 0.26] | 11.74 | < .001***
raq_06 | raq_21 | 0.26 | [ 0.23, 0.30] | 13.86 | < .001***
raq_06 | raq_22 | 0.33 | [ 0.29, 0.36] | 17.48 | < .001***
raq_06 | raq_23 | 0.17 | [ 0.13, 0.21] | 8.85 | < .001***
raq_07 | raq_08 | 0.25 | [ 0.21, 0.28] | 13.03 | < .001***
raq_07 | raq_09 | 0.17 | [ 0.13, 0.20] | 8.48 | < .001***
raq_07 | raq_10 | 0.28 | [ 0.25, 0.32] | 14.94 | < .001***
raq_07 | raq_11 | 0.21 | [ 0.18, 0.25] | 11.02 | < .001***
raq_07 | raq_12 | 0.10 | [ 0.06, 0.13] | 4.89 | < .001***
raq_07 | raq_13 | 0.31 | [ 0.28, 0.35] | 16.58 | < .001***
raq_07 | raq_14 | 0.25 | [ 0.21, 0.29] | 13.16 | < .001***
raq_07 | raq_15 | 0.29 | [ 0.25, 0.32] | 15.28 | < .001***
raq_07 | raq_16 | 0.12 | [ 0.08, 0.16] | 6.21 | < .001***
raq_07 | raq_17 | 0.21 | [ 0.17, 0.25] | 10.94 | < .001***
raq_07 | raq_18 | 0.35 | [ 0.32, 0.38] | 18.93 | < .001***
raq_07 | raq_19 | 0.26 | [ 0.22, 0.29] | 13.41 | < .001***
raq_07 | raq_20 | 0.14 | [ 0.10, 0.18] | 7.17 | < .001***
raq_07 | raq_21 | 0.20 | [ 0.16, 0.23] | 10.19 | < .001***
raq_07 | raq_22 | 0.22 | [ 0.19, 0.26] | 11.69 | < .001***
raq_07 | raq_23 | 0.16 | [ 0.12, 0.20] | 8.24 | < .001***
raq_08 | raq_09 | 0.16 | [ 0.13, 0.20] | 8.45 | < .001***
raq_08 | raq_10 | 0.19 | [ 0.15, 0.22] | 9.58 | < .001***
raq_08 | raq_11 | 0.58 | [ 0.56, 0.61] | 36.28 | < .001***
raq_08 | raq_12 | 0.13 | [ 0.09, 0.17] | 6.58 | < .001***
raq_08 | raq_13 | 0.20 | [ 0.16, 0.24] | 10.38 | < .001***
raq_08 | raq_14 | 0.23 | [ 0.19, 0.27] | 12.01 | < .001***
raq_08 | raq_15 | 0.23 | [ 0.19, 0.27] | 11.97 | < .001***
raq_08 | raq_16 | 0.21 | [ 0.17, 0.24] | 10.69 | < .001***
raq_08 | raq_17 | 0.55 | [ 0.52, 0.57] | 33.20 | < .001***
raq_08 | raq_18 | 0.28 | [ 0.24, 0.31] | 14.68 | < .001***
raq_08 | raq_19 | 0.21 | [ 0.17, 0.25] | 10.86 | < .001***
raq_08 | raq_20 | 0.26 | [ 0.23, 0.30] | 13.76 | < .001***
raq_08 | raq_21 | 0.30 | [ 0.27, 0.34] | 16.15 | < .001***
raq_08 | raq_22 | 0.22 | [ 0.19, 0.26] | 11.68 | < .001***
raq_08 | raq_23 | 0.14 | [ 0.10, 0.18] | 7.25 | < .001***
raq_09 | raq_10 | 0.11 | [ 0.07, 0.14] | 5.38 | < .001***
raq_09 | raq_11 | 0.17 | [ 0.14, 0.21] | 9.01 | < .001***
raq_09 | raq_12 | 0.06 | [ 0.02, 0.10] | 3.07 | 0.007**
raq_09 | raq_13 | 0.15 | [ 0.11, 0.19] | 7.84 | < .001***
raq_09 | raq_14 | 0.12 | [ 0.09, 0.16] | 6.30 | < .001***
raq_09 | raq_15 | 0.15 | [ 0.11, 0.19] | 7.60 | < .001***
raq_09 | raq_16 | 0.08 | [ 0.04, 0.12] | 4.19 | < .001***
raq_09 | raq_17 | 0.14 | [ 0.10, 0.18] | 7.32 | < .001***
raq_09 | raq_18 | 0.15 | [ 0.11, 0.18] | 7.52 | < .001***
raq_09 | raq_19 | 0.46 | [ 0.43, 0.49] | 26.59 | < .001***
raq_09 | raq_20 | 0.10 | [ 0.06, 0.14] | 5.08 | < .001***
raq_09 | raq_21 | 0.17 | [ 0.13, 0.20] | 8.61 | < .001***
raq_09 | raq_22 | 0.43 | [ 0.40, 0.46] | 23.95 | < .001***
raq_09 | raq_23 | 0.55 | [ 0.52, 0.57] | 33.09 | < .001***
raq_10 | raq_11 | 0.17 | [ 0.13, 0.21] | 8.68 | < .001***
raq_10 | raq_12 | 0.08 | [ 0.04, 0.11] | 3.85 | 0.001**
raq_10 | raq_13 | 0.25 | [ 0.21, 0.28] | 12.99 | < .001***
raq_10 | raq_14 | 0.22 | [ 0.18, 0.26] | 11.38 | < .001***
raq_10 | raq_15 | 0.24 | [ 0.21, 0.28] | 12.68 | < .001***
raq_10 | raq_16 | 0.13 | [ 0.09, 0.17] | 6.59 | < .001***
raq_10 | raq_17 | 0.18 | [ 0.14, 0.21] | 9.12 | < .001***
raq_10 | raq_18 | 0.29 | [ 0.26, 0.33] | 15.43 | < .001***
raq_10 | raq_19 | 0.21 | [ 0.17, 0.25] | 10.97 | < .001***
raq_10 | raq_20 | 0.18 | [ 0.14, 0.22] | 9.23 | < .001***
raq_10 | raq_21 | 0.16 | [ 0.12, 0.20] | 8.34 | < .001***
raq_10 | raq_22 | 0.16 | [ 0.12, 0.19] | 7.98 | < .001***
raq_10 | raq_23 | 0.07 | [ 0.03, 0.11] | 3.53 | 0.003**
raq_11 | raq_12 | 0.10 | [ 0.07, 0.14] | 5.27 | < .001***
raq_11 | raq_13 | 0.20 | [ 0.16, 0.24] | 10.40 | < .001***
raq_11 | raq_14 | 0.19 | [ 0.15, 0.22] | 9.59 | < .001***
raq_11 | raq_15 | 0.20 | [ 0.16, 0.24] | 10.42 | < .001***
raq_11 | raq_16 | 0.17 | [ 0.14, 0.21] | 9.00 | < .001***
raq_11 | raq_17 | 0.47 | [ 0.43, 0.49] | 26.63 | < .001***
raq_11 | raq_18 | 0.24 | [ 0.20, 0.28] | 12.48 | < .001***
raq_11 | raq_19 | 0.19 | [ 0.16, 0.23] | 10.04 | < .001***
raq_11 | raq_20 | 0.27 | [ 0.23, 0.31] | 14.19 | < .001***
raq_11 | raq_21 | 0.29 | [ 0.25, 0.32] | 15.15 | < .001***
raq_11 | raq_22 | 0.20 | [ 0.16, 0.24] | 10.44 | < .001***
raq_11 | raq_23 | 0.13 | [ 0.09, 0.17] | 6.56 | < .001***
raq_12 | raq_13 | 0.07 | [ 0.03, 0.11] | 3.48 | 0.003**
raq_12 | raq_14 | 0.07 | [ 0.03, 0.11] | 3.50 | 0.003**
raq_12 | raq_15 | 0.07 | [ 0.03, 0.11] | 3.63 | 0.002**
raq_12 | raq_16 | 0.15 | [ 0.12, 0.19] | 7.87 | < .001***
raq_12 | raq_17 | 0.07 | [ 0.03, 0.11] | 3.71 | 0.002**
raq_12 | raq_18 | 0.10 | [ 0.06, 0.14] | 5.00 | < .001***
raq_12 | raq_19 | 0.07 | [ 0.03, 0.11] | 3.40 | 0.003**
raq_12 | raq_20 | 0.12 | [ 0.08, 0.16] | 6.27 | < .001***
raq_12 | raq_21 | 0.16 | [ 0.13, 0.20] | 8.42 | < .001***
raq_12 | raq_22 | 0.08 | [ 0.04, 0.12] | 4.05 | < .001***
raq_12 | raq_23 | 0.05 | [ 0.01, 0.09] | 2.70 | 0.014*
raq_13 | raq_14 | 0.27 | [ 0.24, 0.31] | 14.44 | < .001***
raq_13 | raq_15 | 0.29 | [ 0.25, 0.32] | 15.31 | < .001***
raq_13 | raq_16 | 0.15 | [ 0.11, 0.19] | 7.66 | < .001***
raq_13 | raq_17 | 0.18 | [ 0.14, 0.21] | 9.12 | < .001***
raq_13 | raq_18 | 0.34 | [ 0.31, 0.37] | 18.35 | < .001***
raq_13 | raq_19 | 0.26 | [ 0.22, 0.30] | 13.64 | < .001***
raq_13 | raq_20 | 0.16 | [ 0.12, 0.20] | 8.32 | < .001***
raq_13 | raq_21 | 0.17 | [ 0.14, 0.21] | 8.98 | < .001***
raq_13 | raq_22 | 0.23 | [ 0.19, 0.27] | 11.97 | < .001***
raq_13 | raq_23 | 0.14 | [ 0.10, 0.18] | 7.15 | < .001***
raq_14 | raq_15 | 0.23 | [ 0.20, 0.27] | 12.20 | < .001***
raq_14 | raq_16 | 0.08 | [ 0.05, 0.12] | 4.29 | < .001***
raq_14 | raq_17 | 0.17 | [ 0.13, 0.20] | 8.61 | < .001***
raq_14 | raq_18 | 0.26 | [ 0.22, 0.29] | 13.40 | < .001***
raq_14 | raq_19 | 0.22 | [ 0.18, 0.26] | 11.49 | < .001***
raq_14 | raq_20 | 0.15 | [ 0.11, 0.19] | 7.60 | < .001***
raq_14 | raq_21 | 0.17 | [ 0.13, 0.20] | 8.57 | < .001***
raq_14 | raq_22 | 0.22 | [ 0.18, 0.26] | 11.43 | < .001***
raq_14 | raq_23 | 0.13 | [ 0.09, 0.16] | 6.47 | < .001***
raq_15 | raq_16 | 0.11 | [ 0.07, 0.15] | 5.59 | < .001***
raq_15 | raq_17 | 0.21 | [ 0.18, 0.25] | 11.06 | < .001***
raq_15 | raq_18 | 0.32 | [ 0.29, 0.35] | 17.13 | < .001***
raq_15 | raq_19 | 0.23 | [ 0.19, 0.27] | 11.99 | < .001***
raq_15 | raq_20 | 0.16 | [ 0.12, 0.20] | 8.12 | < .001***
raq_15 | raq_21 | 0.17 | [ 0.13, 0.20] | 8.50 | < .001***
raq_15 | raq_22 | 0.24 | [ 0.20, 0.27] | 12.38 | < .001***
raq_15 | raq_23 | 0.15 | [ 0.11, 0.19] | 7.71 | < .001***
raq_16 | raq_17 | 0.18 | [ 0.14, 0.21] | 9.10 | < .001***
raq_16 | raq_18 | 0.12 | [ 0.09, 0.16] | 6.36 | < .001***
raq_16 | raq_19 | 0.14 | [ 0.10, 0.17] | 6.93 | < .001***
raq_16 | raq_20 | 0.23 | [ 0.20, 0.27] | 12.11 | < .001***
raq_16 | raq_21 | 0.26 | [ 0.23, 0.30] | 13.87 | < .001***
raq_16 | raq_22 | 0.11 | [ 0.07, 0.15] | 5.58 | < .001***
raq_16 | raq_23 | 0.10 | [ 0.06, 0.14] | 5.10 | < .001***
raq_17 | raq_18 | 0.25 | [ 0.22, 0.29] | 13.31 | < .001***
raq_17 | raq_19 | 0.20 | [ 0.16, 0.23] | 10.17 | < .001***
raq_17 | raq_20 | 0.22 | [ 0.18, 0.26] | 11.38 | < .001***
raq_17 | raq_21 | 0.26 | [ 0.22, 0.29] | 13.54 | < .001***
raq_17 | raq_22 | 0.21 | [ 0.17, 0.24] | 10.71 | < .001***
raq_17 | raq_23 | 0.14 | [ 0.10, 0.17] | 7.01 | < .001***
raq_18 | raq_19 | 0.30 | [ 0.26, 0.33] | 15.91 | < .001***
raq_18 | raq_20 | 0.18 | [ 0.14, 0.22] | 9.24 | < .001***
raq_18 | raq_21 | 0.19 | [ 0.16, 0.23] | 9.97 | < .001***
raq_18 | raq_22 | 0.27 | [ 0.23, 0.30] | 14.08 | < .001***
raq_18 | raq_23 | 0.13 | [ 0.09, 0.17] | 6.65 | < .001***
raq_19 | raq_20 | 0.16 | [ 0.13, 0.20] | 8.43 | < .001***
raq_19 | raq_21 | 0.18 | [ 0.15, 0.22] | 9.47 | < .001***
raq_19 | raq_22 | 0.42 | [ 0.39, 0.45] | 23.34 | < .001***
raq_19 | raq_23 | 0.44 | [ 0.41, 0.47] | 24.85 | < .001***
raq_20 | raq_21 | 0.35 | [ 0.31, 0.38] | 18.85 | < .001***
raq_20 | raq_22 | 0.17 | [ 0.13, 0.21] | 8.65 | < .001***
raq_20 | raq_23 | 0.11 | [ 0.08, 0.15] | 5.82 | < .001***
raq_21 | raq_22 | 0.18 | [ 0.14, 0.21] | 9.16 | < .001***
raq_21 | raq_23 | 0.14 | [ 0.10, 0.18] | 7.28 | < .001***
raq_22 | raq_23 | 0.40 | [ 0.37, 0.43] | 22.17 | < .001***

p-value adjustment method: Holm (1979)

Observations: 2571
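The columns of this table are tied together by standard formulas, so individual rows can be cross-checked. The following is a minimal Python sketch (standard library only) that assumes: the t test has df = n - 2 = 2569 for the 2,571 observations; the 95% CI is the usual Fisher z interval; the Holm correction runs over all C(23, 2) = 253 item pairs; and the stars follow the conventional .05 / .01 / .001 cut-offs. All function names are illustrative and are not the API of the package that printed this table. Because r is printed to only two decimals, the sketch recovers r from the reported t rather than recomputing t from the rounded r.

```python
import math

# Assumptions taken from the output above: 2571 observations, 23 items (raq_01..raq_23).
N_OBS = 2571
DF = N_OBS - 2                 # Pearson t-test df: 2571 - 2 = 2569
N_TESTS = math.comb(23, 2)     # 253 unique item pairs = the m used by Holm's method


def pearson_r(x, y):
    """Sample Pearson correlation of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r, df=DF):
    """t statistic for H0: rho = 0."""
    return r * math.sqrt(df / (1.0 - r * r))


def r_from_t(t, df=DF):
    """Invert t_from_r to recover the unrounded r from a reported t."""
    return t / math.sqrt(t * t + df)


def fisher_ci(r, n=N_OBS, z_crit=1.959963984540054):
    """Approximate 95% CI for rho via the Fisher z-transformation (assumed method)."""
    z, se = math.atanh(r), 1.0 / math.sqrt(n - 3)
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


def holm(pvals):
    """Holm (1979) step-down adjusted p-values, returned in input order."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted, running_max = [0.0] * m, 0.0
    for rank, i in enumerate(order):
        # The smallest p is multiplied by m, the next by m - 1, and so on; the
        # running max keeps adjusted values monotone, and they are capped at 1.
        running_max = max(running_max, (m - rank) * pvals[i])
        adjusted[i] = min(1.0, running_max)
    return adjusted


def stars(p):
    """Conventional significance stars (assumed): *** < .001, ** < .01, * < .05."""
    return "***" if p < 0.001 else "**" if p < 0.01 else "*" if p < 0.05 else ""


# Cross-check the raq_08 / raq_11 row: t(2569) = 36.28, r = 0.58, CI [0.56, 0.61].
r = r_from_t(36.28)
lo, hi = fisher_ci(r)
print(f"r = {r:.2f}, 95% CI [{lo:.2f}, {hi:.2f}]")  # r = 0.58, 95% CI [0.56, 0.61]
print(N_TESTS)  # 253
```

For the raq_08 / raq_11 row the sketch reproduces r = 0.58 and the interval [0.56, 0.61]. Applying `holm` to the 253 unadjusted p-values should likewise give the adjusted p column, assuming those raw p-values come from the t distribution with 2569 df.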